LECTURE NOTES

for the course

"Information and Communication Technologies"

for students of all specialties of KarGTU

Karaganda 2018

The role of ICT in modern society

The twentieth century was the century of industry. The main result of the century was the transition to the machine production of goods and synthetic materials, the creation of conveyor production lines and automatic factories. The most significant inventions in the 20th century were electric lamps, cars and telephones, planes and radio, television and antibiotics, refrigerators, computers and mobile phones.

The twenty-first century became the century of information and communication technologies (ICT). The current stage of the development of the world community is characterized by a wide application of information and communication technologies.

Technical progress and the emergence of new devices and technologies require information technology specialists to keep improving and developing their skills. The automation of enterprises and the introduction of information systems are today among the most promising and popular processes in all spheres of human activity. Thanks to the rapid development of information technologies and the Internet, there is now open access to political, financial, scientific and technical information. ICT now largely determines a country's scientific and technical potential, the level of development of its economy, and the way people live and work.

ICT plays an important role in modern education. The use of images and video makes classes more attractive, which can help students learn the material better. ICT makes it possible to individualize the learning process: to choose the pace of training, return to the training material, and check the level of knowledge. Every teacher should have basic ICT skills and be able to apply them in practice, and in the long term should strive to learn how to use ICT throughout their professional activities.

The main advantages of ICT in education

1. Images and videos can be used in teaching to help students memorize the material.

2. Teachers can explain complex objects and processes more easily, giving students a deeper understanding.

3. Interactive classes are more attractive, which can lead to better attendance and concentration among students.

The most prestigious professions are related to the processing of information. Anyone who is interested in achieving success in the profession should be able to collect, analyze, study and present information.

Types of Information technologies

The main types of information technology include:

1. IT data processing.

2. IT automated office.

3. IT management.

4. IT decision support.

5. IT expert systems.

IT data processing

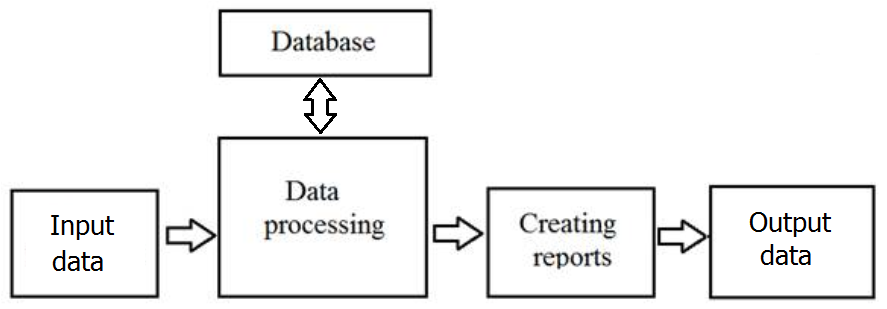

Information technology of data processing is intended for solving problems in which there are input data and known algorithms and data-processing procedures (fig. 1.1).

Figure 1.1 – IT data processing

Data collection

All enterprises produce products or provide services. Each action must be accompanied by records that are stored in a database.

For example, when an employee is hired, information about them is recorded in the database. The receipt, storage and sale of goods are also recorded in the database.

So, in order to analyze the data, you must first get it.

Data processing

Data processing makes it possible to obtain new data. Many events take place inside an enterprise every day, but only a few of them are of interest to management.

For example, converting absolute trade turnover figures into percentages yields useful information.

Data processing is performed by the database management system (DBMS) using arithmetic and logical operations. The DBMS outputs the results in the form of reports.

Data processing operations include:

• sorting;

• grouping;

• calculations;

• aggregation (consolidation).
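The four operations listed above can be illustrated with a short Python sketch. The sales records, field names and regions are invented for the example; in practice the data would come from the DBMS.

```python
from collections import defaultdict

# Hypothetical sales records, as they might be read from a database table.
sales = [
    {"region": "North", "product": "A", "amount": 1200},
    {"region": "South", "product": "B", "amount": 800},
    {"region": "North", "product": "B", "amount": 500},
    {"region": "South", "product": "A", "amount": 1500},
]

# Sorting: order the records by amount, largest first.
by_amount = sorted(sales, key=lambda r: r["amount"], reverse=True)

# Grouping and aggregation: total turnover per region.
totals = defaultdict(int)
for record in sales:
    totals[record["region"]] += record["amount"]

# Calculation: convert absolute totals into percentage shares.
grand_total = sum(totals.values())
shares = {region: round(100 * t / grand_total, 1) for region, t in totals.items()}

# Report: one line per region.
for region in sorted(totals):
    print(f"{region}: {totals[region]} ({shares[region]}%)")
```

The last loop plays the role of a simple report: it shows both the absolute turnover and its relative share for each region.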

Creating reports

Data processing technology must produce documents for the firm's employees and management, as well as for external partners.

Documents are created both in connection with individual operations carried out by the firm and periodically, at the end of each month, quarter or year.

IT automated office

Office automation technologies are used by managers, specialists, secretaries and clerical employees. They help to increase the productivity of secretaries and clerical workers and enable them to cope with an increasing volume of work. Many software products are currently available for office automation:

• Word processor.

• Spreadsheet.

• Database.

• Email.

• Electronic calendar.

• Teleconferences.

• Other programs.

An important component of an automated office is the database.

In the automated office, the database concentrates the data about the production system of the firm in the same way as in the data processing technology.

Information in the database can also come from the external environment of the firm. Specialists should master the basic operations needed to work in a database environment.

IT management

The purpose of IT management is to meet the information needs of company employees who deal with decision-making.

For decision-making, the information should be presented in such a way that trends in the data and the reasons for any deviations can be seen.

IT management solves the following data processing tasks:

• evaluation of the planned state of the managed object;

• assessment of deviations from the planned state;

• identification of the causes of deviations;

• analysis of possible solutions and actions.
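The assessment of deviations from the planned state can be sketched in Python as follows. The indicator names, the figures and the 5% tolerance are assumptions made for the example, not values from any real system.

```python
# Hypothetical monthly indicators: (planned value, actual value).
indicators = {
    "output_units": (1000, 920),
    "costs": (50000, 56000),
    "sales": (70000, 70500),
}

TOLERANCE_PERCENT = 5.0  # assumed threshold for reporting a deviation


def deviation_percent(planned, actual):
    """Relative deviation of the actual value from the plan, in percent."""
    return 100.0 * (actual - planned) / planned


def deviation_report(data, tolerance=TOLERANCE_PERCENT):
    """Return only the indicators whose deviation exceeds the tolerance."""
    report = {}
    for name, (planned, actual) in data.items():
        dev = deviation_percent(planned, actual)
        if abs(dev) > tolerance:
            report[name] = round(dev, 1)
    return report


print(deviation_report(indicators))
```

Only the indicators that deviate noticeably from the plan reach the manager, who can then look for the causes and consider possible actions.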

IT decision support

The main feature of information technology for decision-making support is a qualitatively new method of organizing human-computer interaction.

A solution is developed through an iterative process involving the person and the computer. The final decision is made by the person.

IT decision support can be used at any level of management. Therefore, an important function is the coordination of decision-makers at different levels of management.

IT expert systems

Expert systems are based on the use of artificial intelligence. The main idea of using the technology of expert systems is to get knowledge from the expert and use it whenever you need it.

Solving special problems requires special knowledge. However, not every company can afford to keep on its staff experts on every issue related to its work who would be available whenever a problem arises.

Expert systems are computer programs that transform the experience of experts in any field of knowledge into the form of heuristic rules (heuristics).

The main components of information technology used in the expert system are: knowledge base, interpreter, system creation module.

Knowledge base. It contains facts that describe the problem area, as well as the logical interrelation of these facts. The central place in the knowledge base belongs to the rules.

The rule determines what to do in a given situation, and consists of two parts: a condition that can be satisfied or not, and an action that must be performed if the condition is met.

Interpreter. This is the part of the expert system that processes the knowledge stored in the knowledge base in a certain order.

System creation module. It serves to create a set of rules. Two approaches can be used as a basis for the system creation module: algorithmic programming languages and expert system shells.
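A knowledge base of "condition and action" rules and a simple interpreter can be sketched in Python. This is only an illustration: the troubleshooting rules are invented, and the interpreter uses forward chaining, which is one possible processing order among several.

```python
# Knowledge base: each rule is a (condition, action) pair.
# The condition is a set of facts that must all hold;
# the action adds a new fact. The rules are invented for illustration.
RULES = [
    ({"no_power_light"}, "check_power_cable"),
    ({"power_light_on", "blank_screen"}, "check_monitor_cable"),
    ({"check_monitor_cable", "cable_ok"}, "suspect_video_card"),
]


def interpret(facts, rules=RULES):
    """Apply rules repeatedly until no rule adds a new fact (forward chaining)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for condition, action in rules:
            # A rule fires when its whole condition is satisfied.
            if condition <= known and action not in known:
                known.add(action)
                changed = True
    return known


observed = {"power_light_on", "blank_screen", "cable_ok"}
derived = interpret(observed)
print(sorted(derived - observed))
```

Here the second rule fires first, and the fact it adds then satisfies the condition of the third rule, so the conclusions are chained together.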

Applied Informatics

The achievements of modern informatics are widely used in various fields of human activity:

- automated systems for scientific research;

- automated design systems;

- automated information systems;

- automatic control systems;

- automated learning systems.

Artificial intelligence is the field of computer science that specializes in modeling the intellectual and sensory abilities of a person.

This branch of informatics solves the most complicated problems associated with mathematics, medicine, psychology, technology and other sciences.

Artificial intelligence and robotics are now the two most actively developing areas of science and technology. New technologies provide many benefits to a number of industries, not just the manufacturing sector where they are already used.

Forward-thinking companies are entering a new scientific and technological revolution (Industry 4.0) and are adopting artificial intelligence and robotics. One example is virtual interlocutors (chatbots): organizations are increasingly using artificial intelligence so that computers can understand customers' requests and respond to them. At the same time, financial institutions use automation to handle increasing amounts of data.

According to international reports, 5.1 million jobs will be replaced by robots by 2020, and companies using automation and new technologies will be able to free up resources for the development of innovative ideas.

The British research organization RSA has published a study on how automation and artificial intelligence can affect the industry in the future. On the one hand, modern technologies can lead to higher unemployment and lower wages. On the other hand, artificial intelligence and robotics should improve productivity by making British enterprises more competitive, encouraging higher wages and a gradual reduction in routine, dangerous and harmful jobs.

ICT Standards

Standardization is the adoption of agreements on the specification, production and use of computer hardware and software, and the establishment and application of standards, norms, rules, etc.

Standardization in the field of ICT is aimed at making the various types of information technology, together with their components and processes, conform more closely to their functional purpose. At the same time, technical barriers to international information exchange are eliminated.

Standards allow information technology developers to use data, software and communication tools from other developers, and to integrate various components of information technology.

For example, inter-program interface standards regulate the interaction between different programs; one of them is the standard for OLE (Object Linking and Embedding) technology. Without such standards, software products would be "closed" to each other.

User requirements for standardization in the field of information technology are implemented in standards for the user interface, for example, in the GUI (Graphical User Interface) standard.

Standards play an increasingly important part in the development of the information technology industry. More than 1000 standards have already been adopted by standardization organizations or are under development. The process of standardizing information technology is not yet over.

In 2009, an initiative of the European Commission led mobile phone manufacturers to agree on a standardized charger using the micro-USB connector.

Standards can contribute in principle to improving the impact of the information and communication technologies (ICT) sector on sustainable development.

ICT standards contain requirements for computer hardware, networks, software, databases, information systems, information coding and security.

With the advent of new generations of technical devices and software, there is a problem of compatibility, including functional, technical, information and communication.

This problem is solved by the methodology of open systems, currently supported by major computer equipment manufacturers such as Hewlett-Packard, IBM and Sun Microsystems.

An open system is a system that consists of components that interact with each other through standard interfaces.
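The idea of components interacting through a standard interface can be sketched in Python. The `Storage` interface and both component classes are hypothetical names introduced only for this illustration.

```python
from typing import Protocol


class Storage(Protocol):
    """The agreed 'standard interface' that every storage component offers."""

    def save(self, key: str, value: str) -> None: ...

    def load(self, key: str) -> str: ...


class MemoryStorage:
    """One developer's component: keeps data in a dictionary."""

    def __init__(self):
        self._data = {}

    def save(self, key, value):
        self._data[key] = value

    def load(self, key):
        return self._data[key]


class ListStorage:
    """Another developer's component: different internals, same interface."""

    def __init__(self):
        self._items = []

    def save(self, key, value):
        self._items.append((key, value))

    def load(self, key):
        # Search from the end so that the latest saved value wins.
        for k, v in reversed(self._items):
            if k == key:
                return v
        raise KeyError(key)


def archive_report(storage: Storage, text: str) -> str:
    """Works with any component that follows the Storage interface."""
    storage.save("report", text)
    return storage.load("report")


print(archive_report(MemoryStorage(), "Q1 results"))
print(archive_report(ListStorage(), "Q1 results"))
```

The function `archive_report` does not know which component it receives, so one component can be replaced by another without changing the rest of the system.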

The history of the concept of open systems starts from the moment when there was a problem of portability (mobility) of programs and data between computers with different architectures.

One of the first steps in this direction, which influenced the development of computer technology, was the creation of IBM 360 series computers that have a single set of commands and can work with the same operating system.

Mobility was also ensured by the fact that these standards were adopted by many manufacturers of various platforms.

The solution to the problems of compatibility and mobility has led to the development of a large number of international standards in the field of application of information technology and the development of information systems.

The notion of an "open system" became the basic concept in the use of standards. Open systems are characterized by the following properties.

Interoperability

The ability to interact with other application systems (the hardware on which the information system is implemented is connected by a network or by networks of different levels, from local to global).

Standardization

An information system (IS) is designed and developed on the basis of agreed international standards and proposals; openness is implemented on the basis of functional standards in the field of information technology.

Scalability

The ability to move application programs and transfer data between systems and environments that have different characteristics and functionality.

The ability to add new IS functions or change some of the existing ones while the remaining functional parts of the IS stay unchanged.

Mobility

The ability to transfer applications and data when IS hardware platforms are upgraded or replaced, and the ability of IT professionals to keep working with the system without special retraining when the IS changes.

User-friendliness

Unified interfaces for interaction in the "user-computer" system, which allow a user with no special training to work with it.

ICT standards contain requirements for computer hardware and networks, information support and software, databases, and information systems.

These include life-cycle standards, open system interconnections, as well as standards for software documentation and ICT security.

Computer Architecture

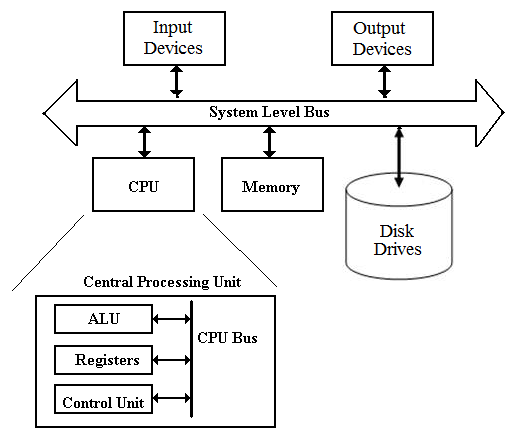

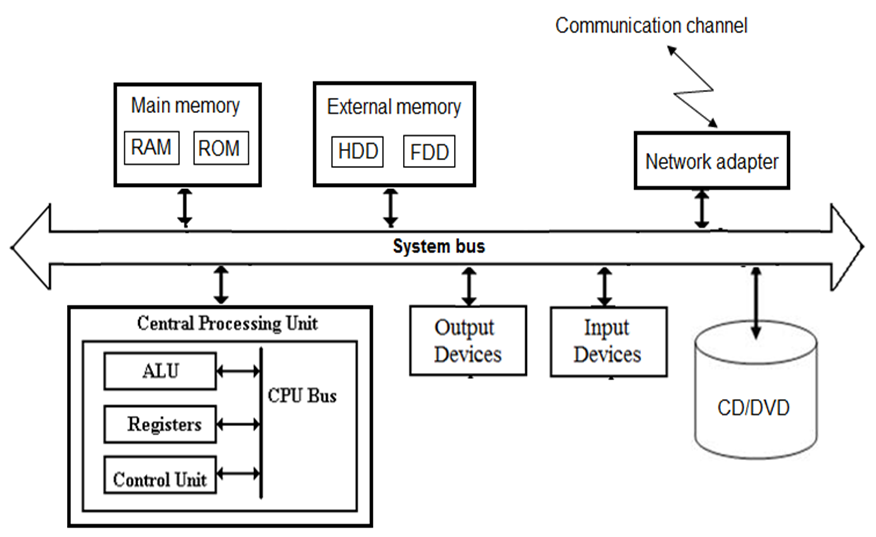

A modern computer system includes a CPU, main memory, disk drives, and input and output devices (fig. 2.1).

Figure 2.1 – Computer structure

The CPU executes commands and controls the other devices of the computer. Main memory (RAM) contains the currently running programs and their data. External memory stores programs and data for future use. Input devices convert data and instructions into a form suitable for processing by the computer.

Output devices present information in the form convenient for the user. Communication devices control the reception and transmission of data in local and global networks.

A computer operates by executing programs. A computer program is a sequence of commands. A command is a description of an operation to be performed by the CPU. The command system of a CPU consists of arithmetic, logical and other commands.

Computer architecture is the set of general principles of hardware and software organization (instruction set, addressing modes, memory management, etc.) that determines the functionality of a computer for solving problems.

The architecture includes the following principles of construction of the computer:

1. The ways of accessing the computer's memory;

2. The ways of accessing external devices;

3. The command system of the processor;

4. The formats of commands and data;

5. The organization of hardware interfaces.

The architecture of modern computers is based on a modular principle.

Communication between devices of the computer is carried out through the system bus (fig. 2.2).

A system bus is a single computer bus that connects the major components of a computer system.

Figure 2.2 – Communication between devices of the computer

The system bus consists of three groups of conductors:

• Data bus;

• Address bus;

• Control bus.

Additional peripheral devices can be connected to the system bus.

A peripheral device is connected to the system bus through a controller (adapter). The motherboard has special connectors (slots) for installing controllers. The peripheral device is controlled through a driver program.

Von Neumann's Principles

1. The principle of program control.

2. The principle of uniformity of memory.

3. The principle of addressability of memory.

4. The principle of using the binary number system.
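These principles can be illustrated with a very small simulated machine in Python. The instruction set and the encoding (opcode multiplied by 100 plus an address) are made up for the example and do not correspond to any real processor.

```python
# Opcodes of a made-up instruction set; a command word is opcode * 100 + address.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory):
    """Fetch-decode-execute loop over one memory that holds both code and data."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next command
    while True:
        word = memory[pc]                    # fetch the command by its address
        opcode, address = divmod(word, 100)  # decode it
        pc += 1                              # commands are executed in sequence
        if opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode}")


memory = [
    105,  # address 0: LOAD the value at address 5
    206,  # address 1: ADD the value at address 6
    307,  # address 2: STORE the result at address 7
    0,    # address 3: HALT
    0,    # address 4: unused
    20,   # address 5: data
    22,   # address 6: data
    0,    # address 7: the result is written here
]

run(memory)
print(memory[7])       # 42
print(bin(memory[0]))  # the first command written as a binary number
```

Commands and data sit in the same memory and differ only in how they are used (uniformity), every cell is reached by its address (addressability), the program counter drives execution (program control), and any cell can be written as a binary number.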

At present the following architectural solutions are most common:

- Classical architecture (von Neumann architecture);

- SMP-systems (Symmetrical Multi Processor systems);

- NUMA-systems (Non-Uniform Memory Access systems);

- Clusters.

Classical architecture (von Neumann architecture). This is a single-processor computer. The architecture of a personal computer with a common bus belongs to this type (fig. 2.3).

Figure 2.3 – Classical architecture

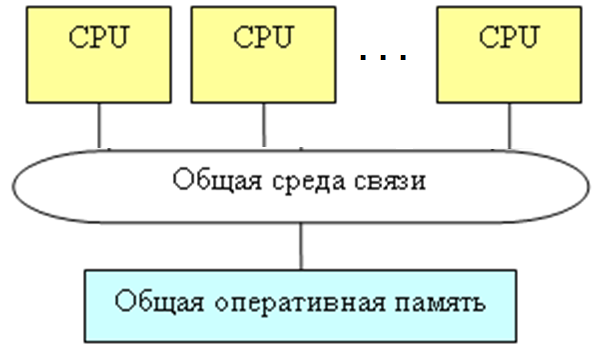

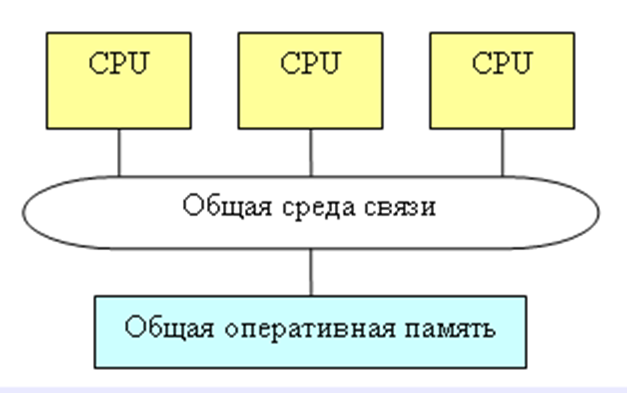

SMP-systems (Symmetrical Multi Processor systems). Having several processors in one computer means that several fragments of one task can be executed in parallel (fig. 2.4).
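Splitting one task into fragments that are processed by several workers can be sketched in Python. This is an illustration of the idea only: the function names are invented, and threads in the standard Python interpreter do not necessarily run such calculations on several processors at once.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut the list into at most `parts` fragments of nearly equal size."""
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum(data, workers=4):
    """Sum each fragment in its own worker, then combine the partial results."""
    if not data:
        return 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, split(data, workers)))
    return sum(partial_sums)


numbers = list(range(1, 1001))
print(parallel_sum(numbers))  # 500500
```

The final step, adding up the partial sums, is the part of the task that cannot be distributed and must wait for all workers to finish.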

Figure 2.4 – Symmetrical Multi Processor systems

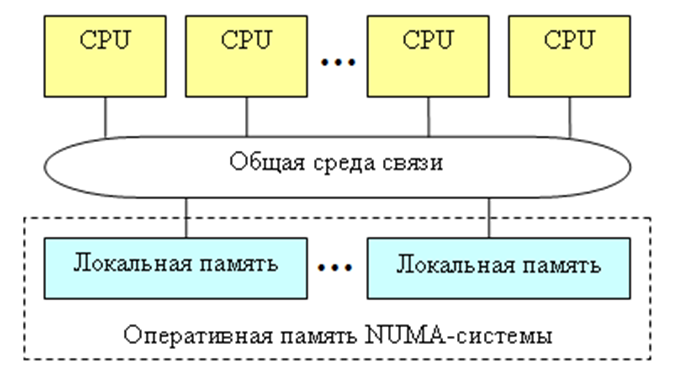

NUMA-systems (Non-Uniform Memory Access systems). In such a system, each processor is attached to its own local memory. Access to local memory is much faster than access to the memory of another processor (fig. 2.5).

Figure 2.5 – Non-Uniform Memory Access systems

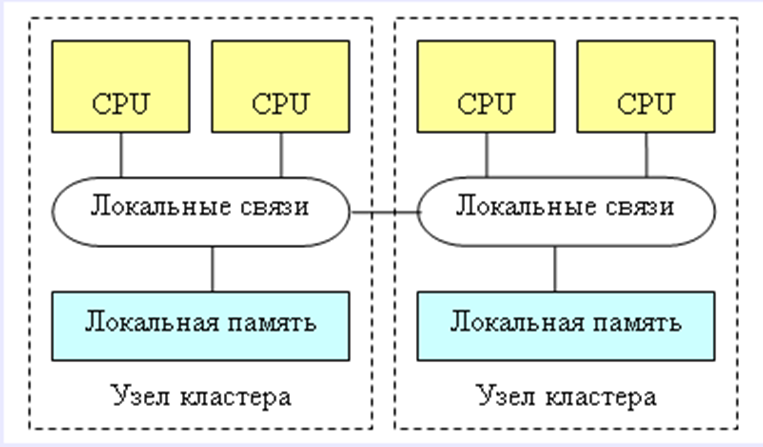

Clusters. Several independent multiprocessor computer systems are united by high-speed communication lines (fig. 2.6).

Figure 2.6 – Clusters

Second generation computers

In 1957, computers began to use semiconductor transistors, which had been invented in 1947-1948 at Bell Labs by John Bardeen, Walter Brattain and William Shockley. Magnetic tape devices and magnetic drums were used for storage. High-level programming languages came into use for software.

Translators appeared that converted programs into machine code.

Third generation computers

In 1960 the first integrated circuits (ICs) came into use. ICs (chips) are widely used because they are small yet highly capable. One chip can replace tens of thousands of transistors. In 1964 the first third-generation computer, of the IBM System/360 series, was created. The IBM System/360 was the first 32-bit computer system. Its operating system, OS/360, became the basis of modern operating systems.

The hexadecimal number system was widely used in the IBM System/360 documentation. In 1964 IBM introduced the first computer monitor, a monochrome display with a 12 x 12 inch screen.
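Hexadecimal notation is convenient because one hexadecimal digit corresponds to exactly four bits, so a 32-bit word is written with eight digits. A short Python sketch of the conversions follows; the helper name `to_hex_word` is invented for the example.

```python
def to_hex_word(number):
    """Write a number as a 32-bit word: eight hexadecimal digits."""
    return format(number & 0xFFFFFFFF, "08X")


value = 0x1F4              # a hexadecimal literal
print(value)               # 500
print(hex(255))            # 0xff
print(int("FF", 16))       # 255
print(to_hex_word(255))    # 000000FF
print(to_hex_word(2**32 - 1))  # FFFFFFFF
```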

Fourth generation computers

Fourth-generation computers use microprocessors. The advent of the microprocessor began a new era of computing. Computers built on microprocessors are called microcomputers. They fit on a desktop, are easy to use and do not require special conditions. In 1981, IBM released its first batch of personal computers. The main advantage of the PC is that it can be used by people who are not specialists in computer technology.

Human-computer interaction

User Interface

An interface is a set of technical, software and methodological protocols, rules and agreements that govern how users interact with devices and programs in a computing system, and how devices interact with other devices and programs.

In a broad sense, an interface is a way of interaction between objects. In the technical sense, an interface specifies the parameters, procedures and characteristics of the interaction of objects.

Currently, the following types of interfaces are distinguished:

- The user interface is a set of methods of interaction between a computer program and the user.

- The program interface is a set of methods of interaction between programs.

- The physical interface is a way for physical devices to interact. Most often this refers to computer ports.

The user interface is a set of software and hardware that allow the user to interact with the computer. The basis of this interaction is the dialogue. Dialogue in this case is understood as a regulated exchange of information between a person and a computer, carried out in real time and aimed at the joint solution of a specific task.

Programmers create a set of windows, forms, menus, buttons, icons, help systems, etc., which together form the interface between the user and a computer program. Most user data processing tasks involve controlling the process through the interface. The main task of such an interface is to enable the user to work effectively with information.

In information technology, the user interface consists of the elements and components of a program that affect the user's interaction with the software; it is a collection of rules, methods, software and hardware that provide the user's interaction with the computer. The user interface is often perceived only as the appearance of the program. In fact, it includes all aspects that affect the interaction of the user and the system, and is determined primarily by factors such as the nature and set of tasks that the user solves with the help of the system, as well as the capabilities of the system's computing resources.

The user interface is the environment and the method of communication between a person and a computer (a set of techniques for interacting with a computer). It is often identified with a dialogue, similar to a dialogue or interaction between two people. The user performs specific actions that are part of the dialogue. These dialogue actions do not always require the computer to process information. They may be needed to move from one panel to another, or from one application to another if more than one application is running. The user interface also includes training programs, reference material, the ability to customize the appearance of programs and the content of menus to meet users' needs, and other services. This includes design, step-by-step tips and visual cues.

A well-designed user interface saves time for both users and developers. For the user, it reduces the time needed to learn and use the system, reduces the number of errors, and gives a feeling of comfort and confidence. The developer can identify common interface blocks, standardize individual elements and the rules for interacting with them, and shorten system design time. These blocks allow programmers to create and modify applications more easily and quickly. For example, if a panel can be used in many systems, application developers can reuse the same panels in different projects.

The CS interface, as the user's tool for managing the search process, is a complex of components (operations and technological objects) that can be combined into blocks according to a functional or other principle and implemented as a system of commands, menus and scenarios. When there are different categories of users, the system must provide a way to select a user interface that reduces complexity as much as is necessary and sufficient for each category. To manage the search process, users must be given special tools for organizing the search, as well as access to previously obtained objects and results.

Initially, the main focus of research on human-computer interaction was the physical interaction of the user with a computer or other device. Human motion patterns have been studied, for example, parameters such as the time required to click an object of a certain size, or the speed of text input using a mobile phone keyboard.

In the well-known book "The Psychology of Human-Computer Interaction" by Stuart Card, Thomas Moran and Allen Newell, published in 1983, a person is viewed as an information processor capable of taking in information (mainly visual), processing it (mentally) and producing output (typing on the keyboard, mouse clicks) that becomes input to the computer. Ultimately, it was thanks to this approach that the modern graphical user interface appeared.

In the near future, human-computer interaction will inevitably change. The pervasive penetration and embedding of technologies makes enormous changes in the computer interface itself. These changes are needed both to make it easier for the mass consumer to use a variety of computers, and so that citizens and society can get all the benefits of computer technology in general without working directly with computers.

Usability of interfaces

In recent years human society has evolved from the “industrial society age” into the “knowledge society age”. This means that knowledge media have migrated from “pen and paper” to computer-based information systems. As a result, ergonomics has assumed increasing importance as a science that deals with adapting work to people, namely in terms of usability.

Usability is a quality or characteristic of a product that denotes how easy this product is to learn and to use. But it is also a group of principles and techniques aimed at designing usable products, based on user-centred design.

Usability is the convenience of working with a program, site, or device for the user. The term usability refers to the general concept of ease of use of software, the logic and simplicity in the arrangement of controls.

The basis of usability is the design of the user interface. A great deal depends on the quality of your program's design. The design of the UI must meet certain standards. Having an original design is good, but having an original design that meets the standards is even better!

It is very important to have a uniform design in all parts of your program. Identify styles, colors and interface elements and use them in the same way throughout your interface. Decide how to highlight the important elements and where to place them on the screen to make it easier for users to perform their tasks. Determine the basic principles of the interface and follow them everywhere. A uniform interface reduces the time and effort users need to start working with it. Once users have learned and understood your design concept, they will feel at ease and confident in all parts of the interface.

The most important element of design is the color scheme. For example, the combination of white text on a black background is now very unpopular and should not be used. Always remember that not everyone has good eyesight or patience: if the text is hard to read, the program is likely to be closed. Define the colors for the main text and for user input, and use these colors in all windows of your application.

Types of interfaces

Conventionally, most known interface solutions can be assigned to one of the following three groups:

1) Command interface. It is so called because the person gives "commands" to the computer, and the computer executes them and returns the result to the person. The command interface is implemented in the form of batch technology and command line technology.

2) WIMP interface (Window, Icon, Menu, Pointer). A characteristic feature of this type of interface is that the dialogue with the user is conducted not through commands but through graphical images: menus, windows and other elements. Commands are still given to the machine in this interface, but "indirectly", through graphic images. This kind of interface is implemented on two levels of technology: a simple graphical interface and a "pure" WIMP interface.

3) SILK interface (Speech, Image, Language, Knowledge). This kind of interface is the closest to the usual, human form of communication. Within this interface, an ordinary "conversation" takes place between a person and a computer. The computer finds commands for itself by analyzing human speech and finding key phrases in it. It also converts the result of executing the commands into a human-readable form. This type of interface is the most demanding on the hardware resources of the computer, and therefore it is used mainly for military purposes.

Examples of the command interface are the DOS command line and the UNIX shell.
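How a command interface works can be shown with a toy command interpreter in Python. The three commands and the dictionary that stands in for a disk are assumptions made for the example; a real DOS or UNIX shell is far richer.

```python
def execute(line, files):
    """Parse one command line and return the text the 'computer' prints."""
    parts = line.split()
    if not parts:
        return ""
    command, args = parts[0].lower(), parts[1:]
    if command == "dir":
        # List the names of all files in alphabetical order.
        return "\n".join(sorted(files))
    if command == "type" and len(args) == 1:
        # Show the contents of one file.
        return files.get(args[0], f"File not found: {args[0]}")
    if command == "echo":
        return " ".join(args)
    return f"Unknown command: {command}"


# A dictionary plays the role of the disk: file name -> contents.
files = {"readme.txt": "hello", "notes.txt": "ICT lecture"}

for line in ["dir", "type readme.txt", "echo done", "copy a b"]:
    print(f"> {line}")
    print(execute(line, files))
```

The user must know the exact command names and their arguments in advance, which is the main difficulty of this kind of interface for beginners.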

The second step in the development of the graphical interface was the WIMP interface. This type of interface is characterized by the following features.

1. All work with programs, files and documents takes place in windows - certain parts of the screen defined by the frame.

2. All programs, files, documents, devices and other objects are represented as icons. When opened, icons turn into windows.

3. All actions with objects are performed using the menu. Although the menu appeared at the first stage of the GUI development, it did not have a dominant value in it, but served only as an addition to the command line. In a pure WIMP interface, the menu becomes the main control element.

4. Wide use of manipulators to point to objects. The manipulator ceases to be just a toy, an addition to the keyboard, and becomes the main control element. Using the manipulator, the user points to any area of the screen, a window or an icon, selects it, and only then controls it through the menu or by other means.

It should be noted that WIMP requires a high-resolution color raster display and a manipulator. Programs oriented to this type of interface also raise the requirements for the computer's performance, memory size, bus bandwidth, and so on. However, this kind of interface is the easiest to learn and the most intuitive. Therefore, the WIMP interface has now become the de facto standard.

A vivid example of programs with a graphical interface is the Microsoft Windows operating system.

The emergence and wide distribution of the graphical user interface (GUI) was caused by the fact that users wanted to have an interface that makes it easy to master the basic procedures and work comfortably on the computer.

The graphical user interface is a graphical environment for the user's interaction with the computer system, which assumes the standard use of the main elements of the user's dialogue with the computer.

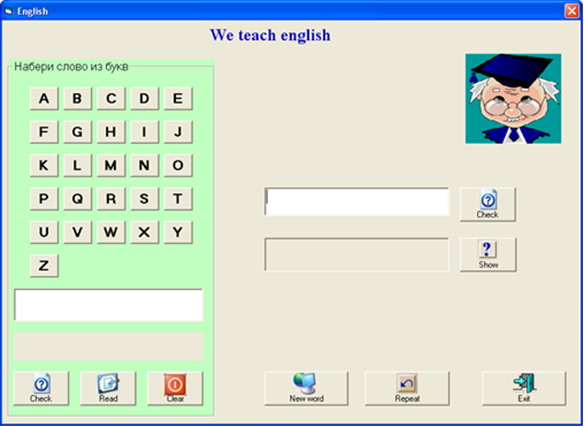

The graphical interface allows you to control the behavior of the computer system through visual controls: windows, lists, buttons, hyperlinks and scroll bars. It includes such concepts as the desktop, windows, icons, elements of the graphical interface, and a pointing device (mouse). These visual elements are created, displayed and processed using graphical applications (figure 4.1).

Figure 4.1 - Graphical user interface

Since the mid-1990s, after the appearance of inexpensive sound cards and the spread of speech recognition technologies, the so-called "speech technology" of the SILK interface has appeared. With this technology, commands are given by voice, by pronouncing special reserved words (commands). The words must be pronounced clearly and at an even pace, with a pause between words. Because speech recognition algorithms are still underdeveloped, such systems require individual preliminary tuning for each specific user.
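The search for reserved words can be sketched in Python under a strong simplifying assumption: the speech has already been converted to text by some recognition step that is not shown here. The reserved words and command names are invented for the example.

```python
# Hypothetical reserved words and the commands they trigger.
RESERVED = {
    "open": "OPEN_FILE",
    "close": "CLOSE_WINDOW",
    "print": "PRINT_DOCUMENT",
}


def find_commands(recognized_text):
    """Return the commands for every reserved word found in the text."""
    commands = []
    for word in recognized_text.lower().split():
        word = word.strip(".,!?")  # ignore punctuation around the word
        if word in RESERVED:
            commands.append(RESERVED[word])
    return commands


print(find_commands("Please open the report and print it."))
```

All words that are not reserved are simply ignored, which is why clear pronunciation of the reserved words matters more than the rest of the phrase.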

Voice control

It should be noted that voice output of information is quite accessible: the computer can speak. Voice input is much more difficult. It is believed that the problems of word recognition are almost solved. Of course, languages have their own features, but once a system is tuned to a specific language and to the speech of a particular user, recognizing words no longer seems a huge problem. For example, Apple introduced the Siri system with its iPhone 4S smartphone to recognize voice commands. Currently, voice control is suitable for more or less simple situations, for example, for a "smart" home.

Gesture control

This kind of control became widely known thanks to the Microsoft Kinect device, which was released first for the Xbox game console and later for ordinary computers. Currently, gesture control is best suited to controlling media devices.

Advantages of gesture control: contactless control; many commands are intuitive. Disadvantages of gesture control: the need for initial setup; low accuracy of gesture recognition; the need to memorize non-obvious gestures; a high probability of errors; the lack of common standards for performing and displaying gestures.

Neurointerface

Advantages of a neurointerface: potentially the fastest command input; potentially the ability to give commands of any complexity; free hands and eyes. Disadvantages of a neurointerface: complex implementation; additional devices (sensors, helmets, etc.); a high probability of errors; the need for special attention to safety when controlling real objects; the need to calibrate for a specific user.

In recent years, many new technologies have appeared, which in the future will radically change the look of user interfaces. Suffice it to recall Siri and Google Glass - these are harbingers of a new stage in the development of interfaces.

Today, MIT Media Lab employees are working on the creation of fluid interfaces that allow information to flow freely from one storage system to another.

For example, a device created in the MIT Media Lab called FingerReader helps blind people read books by simply moving a finger along the lines. FingerReader scans the text and reads it aloud. At the same time, not only audio but also tactile interaction is realized: the device vibrates to mark punctuation, spaces between words, and the end of a sentence. Thus, the information flows from the analog storage system (the book) to the digital one (FingerReader), and is then voiced and assimilated by the user. In this case, the human body itself acts as an interface, and the device only tells it what to do.

The development of the Internet of things will inevitably lead to the fact that many devices will not have interfaces that are familiar to us at all. For example, user identification will be performed by scanning the iris or fingerprint, and the information will come in the form of voice messages or holography. That is, the interface of the future generally will not need a physical medium such as a computer or a smartphone.

Database systems

Bases of database systems

One of the most important applications of computers is the processing and storage of large amounts of information in various areas of human activity: in the economy, banking, trade, transport, medicine, science, etc.

Modern information systems are characterized by huge amounts of stored and processed data.

An information system is a system that performs automated collection, processing and presentation of data. It includes hardware, software and maintenance personnel. Databases are the basis of information systems.

A database is an organized set of data designed for efficient storage, retrieval and processing in a computer system under the control of a database management system (DBMS).

A database can be of any size and of varying complexity.

A database may be generated and maintained manually or it may be computerized. A computerized database may be created and maintained either by a group of application programs written specifically for that task or by a database management system.

A database management system is a collection of programs that enables users to create and maintain a database.

The DBMS is hence a general-purpose software system that facilitates the processes of defining, constructing, manipulating, and sharing databases among various users and applications.

The database system contains not only the database itself but also a complete definition or description of the DB structure and constraints.

This definition is stored in the DBMS catalog, which contains information such as the structure of each file, the type and storage format of each data item, and various constraints on the data.

The information stored in the catalog is called meta-data, and it describes the structure of the primary database.
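To make the idea of a catalog concrete, here is a minimal sketch using Python's standard `sqlite3` module. SQLite is only an assumption for illustration (the lecture does not name a particular DBMS); it exposes its catalog through the built-in `sqlite_master` table and the `PRAGMA table_info` command. The table `personal_info` and its columns are illustrative.

```python
import sqlite3

# In-memory database; the table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE personal_info ("
    " employee_id INTEGER PRIMARY KEY,"
    " first_name TEXT NOT NULL,"
    " last_name TEXT NOT NULL)"
)

# SQLite keeps its catalog (meta-data) in the built-in sqlite_master table.
catalog = conn.execute(
    "SELECT type, name FROM sqlite_master WHERE type = 'table'"
).fetchall()
print(catalog)

# PRAGMA table_info returns one row per column:
# (cid, name, type, notnull, default_value, pk)
columns = conn.execute("PRAGMA table_info(personal_info)").fetchall()
for cid, name, col_type, notnull, default, pk in columns:
    print(name, col_type, "NOT NULL" if notnull else "NULL", "PK" if pk else "")
```

The program never reads the data itself here: everything it prints describes the structure of the database, which is exactly what the catalog stores.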

The architecture of DBMS packages has evolved from the early monolithic systems, where the whole DBMS software package was one tightly integrated system, to the modern DBMS packages that are modular in design, with a client/server system architecture.

In a basic client/server DBMS architecture, the system functionality is distributed between two types of modules: client modules, which run the user interfaces and application programs, and server modules, which handle data storage, access and search. A related concept is the three-schema architecture, which was proposed to separate user applications from the physical database.

Data models

By structure of a database, we mean the data types, relationships, and constraints that should hold for the data.

Most data models also include a set of basic operations for specifying retrievals and updates on the database. In addition to the basic operations provided by the data model, it is becoming more common to include concepts in the data model to specify the dynamic aspect or behavior of a database application. This allows the database designer to specify a set of valid user-defined operations that are allowed on the database objects.

Common logical data models for databases include:

- Hierarchical database model;

- Network model;

- Relational model;

- Object-oriented model;

- Multidimensional model.

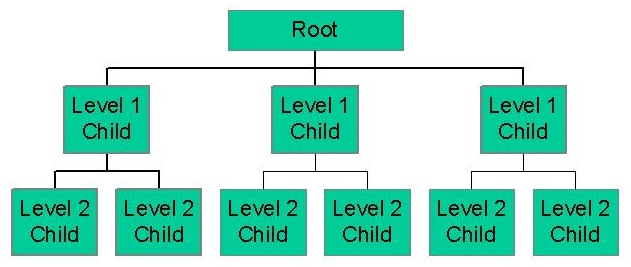

The hierarchical model organizes data into a tree-like structure, where each record has a single parent or root (fig. 5.1). Sibling records are sorted in a particular order. That order is used as the physical order for storing the database.

This model is good for describing many real-world relationships.

This model was primarily used by IBM's Information Management System (IMS) in the 1960s and 1970s, but it is rarely seen today due to certain operational inefficiencies.

Figure 5.1 - The hierarchical model

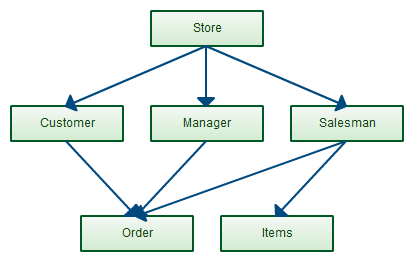

The network model builds on the hierarchical model by allowing many-to-many relationships between linked records, implying multiple parent records (fig. 5.2). Based on mathematical set theory, the model is constructed with sets of related records. Each set consists of one owner or parent record and one or more member or child records. A record can be a member or child in multiple sets, allowing this model to convey complex relationships.

It was most popular in the 70s after it was formally defined by the Conference on Data Systems Languages (CODASYL).

Figure 5.2 - The network model

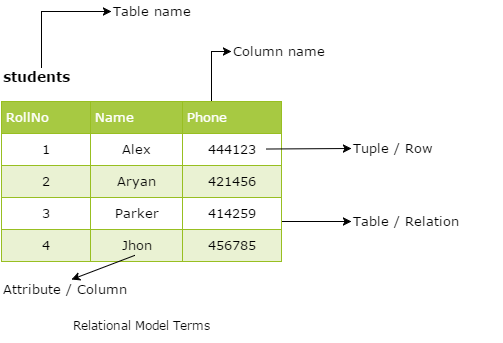

The most common model, the relational model, sorts data into tables, also known as relations, each of which consists of columns and rows (fig. 5.3). Each column lists an attribute of the entity in question, such as price, zip code, or birth date. The set of values that an attribute may take is called its domain. A particular attribute or combination of attributes is chosen as the primary key; when another table refers to it, the referring attribute is called a foreign key.

Each row, also called a tuple, includes data about a specific instance of the entity in question, such as a particular employee.

Figure 5.3 - The relational model

The model also accounts for the types of relationships between those tables, including one-to-one, one-to-many, and many-to-many relationships.

Within the database, tables can be normalized, or brought to comply with normalization rules that make the database flexible, adaptable, and scalable. When normalized, each piece of data is atomic, or broken into the smallest useful pieces.

Relational databases are typically defined and queried using Structured Query Language (SQL). The relational model was introduced by E.F. Codd in 1970.
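The notions of primary key, foreign key and a one-to-many relationship can be sketched with Python's standard `sqlite3` module. The tables `department` and `employee` and their contents are hypothetical, and SQLite is used only because it needs no installation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite checks foreign keys only when this setting is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE department (
    dept_id INTEGER PRIMARY KEY,          -- primary key
    name    TEXT NOT NULL
);
CREATE TABLE employee (
    emp_id  INTEGER PRIMARY KEY,
    name    TEXT NOT NULL,
    dept_id INTEGER NOT NULL REFERENCES department(dept_id)  -- foreign key
);
INSERT INTO department VALUES (1, 'Sales'), (2, 'IT');
INSERT INTO employee VALUES (10, 'Aigul', 1), (11, 'Marat', 2), (12, 'Dana', 2);
""")

# One-to-many: one department row is matched by many employee rows.
rows = conn.execute(
    "SELECT e.name, d.name FROM employee e "
    "JOIN department d ON e.dept_id = d.dept_id ORDER BY e.emp_id"
).fetchall()
print(rows)

# A row that refers to a non-existent department is rejected.
try:
    conn.execute("INSERT INTO employee VALUES (13, 'Test', 99)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True
print(fk_rejected)
```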

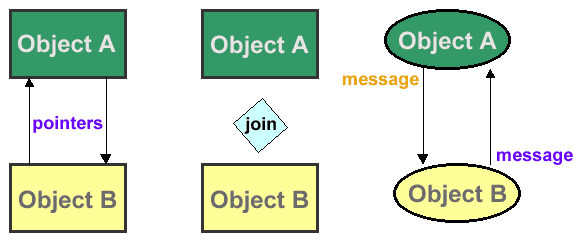

The object-oriented database model defines a database as a collection of objects, or reusable software elements, with associated features and methods (fig. 5.4).

The object-oriented database model is the best known post-relational database model, since it incorporates tables but is not limited to them. Such models are also known as hybrid database models. A hybrid (object-relational) model combines the simplicity of the relational model with some of the advanced functionality of the object-oriented database model. In essence, it allows designers to incorporate objects into the familiar table structure.

Figure 5.4 - Object-oriented database model

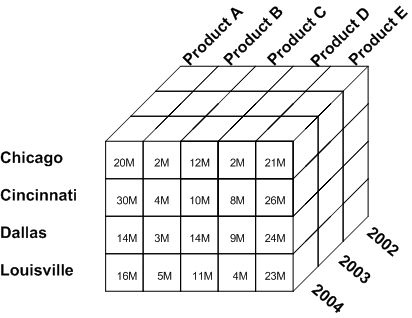

The multidimensional model is a variation of the relational model designed to facilitate improved analytical processing (fig. 5.5). While the relational model is optimized for online transaction processing (OLTP), this model is designed for online analytical processing (OLAP).

Each cell in a dimensional database contains data about the dimensions tracked by the database. Visually, it’s like a collection of cubes, rather than two-dimensional tables.

Figure 5.5 - Multidimensional model
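A data cube can be sketched in plain Python as a dictionary whose keys are coordinates along the dimensions. The dimensions (year, region, product) and the sales figures below are invented for illustration; real OLAP systems use far more elaborate storage.

```python
from collections import defaultdict

# Hypothetical sales facts: (year, region, product) -> amount sold
DIMENSIONS = ("year", "region", "product")
cube = {
    (2017, "North", "Laptop"): 120,
    (2017, "South", "Laptop"): 80,
    (2018, "North", "Laptop"): 150,
    (2018, "North", "Phone"): 200,
    (2018, "South", "Phone"): 170,
}

def roll_up(cube, keep):
    """Aggregate the cube, keeping only the dimensions listed in `keep`."""
    positions = [DIMENSIONS.index(name) for name in keep]
    totals = defaultdict(int)
    for key, amount in cube.items():
        totals[tuple(key[p] for p in positions)] += amount
    return dict(totals)

def slice_cube(cube, dimension, value):
    """Return the cells whose coordinate along `dimension` equals `value`."""
    position = DIMENSIONS.index(dimension)
    return {key: amount for key, amount in cube.items() if key[position] == value}

print(roll_up(cube, ["year"]))             # totals per year
print(slice_cube(cube, "region", "South")) # only the South region
```

Rolling up and slicing are typical analytical (OLAP) operations: they summarize many cells rather than update a single record.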

Normalization

Normalization is the process of efficiently organizing data in a database.

There are two goals of the normalization process: eliminating redundant data (for example, storing the same data in more than one table) and ensuring data dependencies make sense (only storing related data in a table).

Both of these are worthy goals as they reduce the amount of space a database consumes and ensure that data is logically stored.

The normalization process, as first proposed by Codd (1972), takes a relation schema through a series of tests to "certify" whether it satisfies a certain normal form.

The database community has developed a series of guidelines for ensuring that databases are normalized.

These are referred to as normal forms and are numbered from one (the lowest form of normalization, referred to as first normal form or 1NF) through five (fifth normal form or 5NF). In practical applications, you'll often see 1NF, 2NF, and 3NF.

First normal form (1NF) sets the very basic rules for an organized database:

- eliminate duplicative columns from the same table;

- create separate tables for each group of related data and identify each row with a unique column or set of columns (the primary key).

Second normal form (2NF) further addresses the concept of removing duplicative data:

- meet all the requirements of the first normal form;

- remove subsets of data that apply to multiple rows of a table and place them in separate tables;

- create relationships between these new tables and their predecessors through the use of foreign keys.

Third normal form (3NF) goes one large step further:

- meet all the requirements of the second normal form;

- remove columns that are not dependent upon the primary key.
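The effect of the third normal form can be shown on a small, invented example in plain Python. In the flat table below the customer's city depends on `customer_id`, not on the key `order_id`, so it is moved to a separate table. All names and values are hypothetical.

```python
# Unnormalized rows: the customer's city is repeated in every order,
# although it depends on customer_id rather than on the key order_id.
flat_orders = [
    {"order_id": 1, "customer_id": 7, "customer_city": "Karaganda", "item": "Mouse"},
    {"order_id": 2, "customer_id": 7, "customer_city": "Karaganda", "item": "Keyboard"},
    {"order_id": 3, "customer_id": 8, "customer_city": "Astana", "item": "Monitor"},
]

def to_third_normal_form(rows):
    """Split the flat rows into a customers table and an orders table."""
    customers = {}   # customer_id -> city, stored once per customer
    orders = []      # order rows keep only a foreign key to the customer
    for row in rows:
        customers[row["customer_id"]] = row["customer_city"]
        orders.append({key: row[key] for key in ("order_id", "customer_id", "item")})
    return customers, orders

def rejoin(customers, orders):
    """Rebuild the flat rows by following the foreign key."""
    return [dict(order, customer_city=customers[order["customer_id"]])
            for order in orders]

customers, orders = to_third_normal_form(flat_orders)
print(customers)
print(orders)
```

No information is lost: joining the two tables on `customer_id` reproduces the original rows, while each city is now stored only once.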

Fundamentals of SQL

The most common query language used with the relational model is the Structured Query Language (SQL).

SQL defines the methods used to create and manipulate relational databases on all major platforms.

The SQL language includes the following components:

• The Data Definition Language (DDL);

• The Data Manipulation Language (DML);

• The Data Control Language (DCL).

Note that these are not separate languages but groups of commands within the same language. The division reflects only the different functional purposes of the commands.

The data definition language is used to create and modify the structure of the database and its components - tables, indexes, virtual tables, and procedures. For example, the command:

CREATE DATABASE employees

CREATE TABLE personal_info (first_name char(20) not null, last_name char(20) not null, employee_id int not null)

ALTER TABLE personal_info

ADD salary money null

The data manipulation language is used to manipulate data in database tables. For example, the commands:

SELECT *

FROM personal_info

UPDATE personal_info

SET salary = salary * 1.03

DELETE FROM personal_info

WHERE employee_id = 12345
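The DDL and DML statements above can be tried out with Python's standard `sqlite3` module. This is a sketch under assumptions: SQLite has no CREATE DATABASE command (opening a connection plays that role), the `money` type is replaced by `REAL`, and the two inserted rows are invented test data.

```python
import sqlite3

# Opening a connection stands in for CREATE DATABASE employees.
conn = sqlite3.connect(":memory:")

# DDL: define and then alter the table structure.
conn.execute(
    "CREATE TABLE personal_info ("
    " first_name CHAR(20) NOT NULL,"
    " last_name CHAR(20) NOT NULL,"
    " employee_id INT NOT NULL)"
)
conn.execute("ALTER TABLE personal_info ADD COLUMN salary REAL")

# DML: insert, update, delete and select rows (the data is illustrative).
conn.executemany(
    "INSERT INTO personal_info VALUES (?, ?, ?, ?)",
    [("Aigul", "Akhmetova", 12345, 1000), ("Marat", "Ivanov", 12346, 2000)],
)
conn.execute("UPDATE personal_info SET salary = salary * 1.03")
conn.execute("DELETE FROM personal_info WHERE employee_id = ?", (12345,))
rows = conn.execute("SELECT * FROM personal_info").fetchall()
print(rows)
```

The `?` placeholders pass values separately from the SQL text, which is the usual way to avoid building statements by string concatenation.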

The data control language is used to manage access rights to data and to the execution of procedures in a multi-user environment. For example, the commands:

GRANT SELECT, UPDATE ON employee TO hr;

REVOKE UPDATE ON employee FROM hr;

Knowledge Discovery

Some people treat data mining as a synonym for knowledge discovery, while others view data mining as an essential step in the knowledge discovery process. The steps involved in that process are:

- Data Cleaning;

- Data Integration;

- Data Selection;

- Data Transformation;

- Data Mining;

- Pattern Evaluation;

- Knowledge Presentation.

Data Integration

Data Integration is a data preprocessing technique that merges the data from multiple heterogeneous data sources into a coherent data store. Data integration may involve inconsistent data and therefore needs data cleaning.

Data Cleaning

Data cleaning is a technique that is applied to remove the noisy data and correct the inconsistencies in data. Data cleaning involves transformations to correct the wrong data. Data cleaning is performed as a data preprocessing step while preparing the data for a data warehouse.

Data Selection

Data Selection is the process where data relevant to the analysis task are retrieved from the database. Sometimes data transformation and consolidation are performed before the data selection process.

Clusters

A cluster is a group of similar objects. Cluster analysis forms groups of objects that are very similar to each other but highly different from the objects in other clusters.

Data Transformation

In this step, data is transformed or consolidated into forms appropriate for mining, by performing summary or aggregation operations.

Data Mining

In this step, intelligent methods are applied in order to extract data patterns.

There are two forms of data analysis that can be used for extracting models describing important classes or to predict future data trends:

- Classification;

- Prediction.

Classification models predict categorical class labels, while prediction models predict continuous-valued functions.

For example, we can build a classification model to categorize bank loan applications as either safe or risky, or a prediction model to predict the expenditures in dollars of potential customers on computer equipment given their income and occupation.

The following are examples of cases where the data analysis task is classification:

- a bank loan officer wants to analyze the data in order to know which customers (loan applicants) are risky and which are safe;

- a marketing manager at a company needs to determine whether a customer with a given profile will buy a new computer.

In both of the above examples, a model or classifier is constructed to predict the categorical labels. These labels are risky or safe for loan application data and yes or no for marketing data.

The following is an example of a case where the data analysis task is prediction.

Suppose the marketing manager needs to predict how much a given customer will spend during a sale at his company.

In this example we need to predict a numeric value, so the data analysis task is numeric prediction.

In this case, a model or a predictor will be constructed that predicts a continuous-valued-function or ordered value.
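A very simple predictor of this kind is a straight line fitted by least squares. The sketch below is only one possible technique, chosen for brevity; the income and spending figures are invented.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Return the continuous value predicted for x."""
    slope, intercept = model
    return slope * x + intercept

# Hypothetical training data: customer income and amount spent during a sale.
incomes = [20, 30, 40, 50]
spending = [10, 15, 20, 25]

model = fit_line(incomes, spending)
print(predict(model, 60))  # predicted spending for a new customer
```

Unlike a classifier, the predictor returns a number on a continuous scale rather than one of a fixed set of labels.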

With the help of the bank loan application that we have discussed above, let us understand the working of classification.

The Data Classification process includes two steps:

- Building the Classifier or Model;

- Using Classifier for Classification.
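The two steps can be sketched with a deliberately simple nearest-centroid classifier. This is an illustrative technique, not necessarily the one meant in the lecture, and the training data (applicant income with a known label) is invented.

```python
def build_classifier(training_data):
    """Step 1: learn one centroid (mean income) per class label."""
    sums, counts = {}, {}
    for income, label in training_data:
        sums[label] = sums.get(label, 0) + income
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(centroids, income):
    """Step 2: assign the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - income))

# Hypothetical loan applications with known outcomes.
training_data = [(15, "risky"), (20, "risky"), (50, "safe"), (60, "safe")]

centroids = build_classifier(training_data)
print(centroids)
print(classify(centroids, 25))
print(classify(centroids, 45))
```

The model is built once from labeled examples and can then be applied to any number of new, unlabeled applications.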

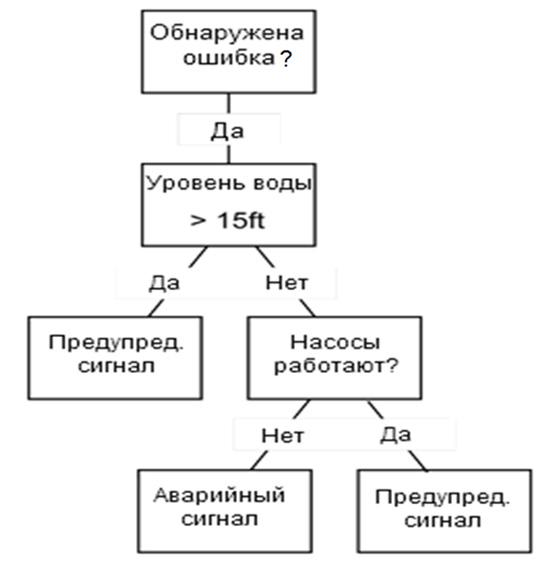

Decision trees

A decision tree is a structure that includes a root node, branches, and leaf nodes. Each internal node denotes a test on an attribute, each branch denotes the outcome of a test, and each leaf node holds a class label. The topmost node in the tree is the root node (fig. 6.2).

The following decision tree is for the concept buy_computer that indicates whether a customer at a company is likely to buy a computer or not. Each internal node represents a test on an attribute. Each leaf node represents a class.

Figure 6.2 - Decision trees

The benefits of having a decision tree are as follows:

- it does not require any domain knowledge;

- it is easy to comprehend;

- the learning and classification steps of a decision tree are simple and fast.
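A decision tree is easy to represent as nested dictionaries. The attributes and branches below (age, student, credit_rating) are assumptions made for this sketch of the buy_computer concept and may differ from the tree shown in the figure.

```python
# Internal nodes are dicts with an attribute to test and one branch per outcome;
# leaves are plain strings holding the class label.
tree = {
    "attribute": "age",
    "branches": {
        "youth": {
            "attribute": "student",
            "branches": {"yes": "yes", "no": "no"},
        },
        "middle_aged": "yes",
        "senior": {
            "attribute": "credit_rating",
            "branches": {"excellent": "no", "fair": "yes"},
        },
    },
}

def classify(node, record):
    """Walk from the root to a leaf, following the branch for each test outcome."""
    while isinstance(node, dict):
        outcome = record[node["attribute"]]
        node = node["branches"][outcome]
    return node

customer = {"age": "youth", "student": "yes", "credit_rating": "fair"}
print(classify(tree, customer))
```

Classification only follows one path from the root to a leaf, which is why this step is fast.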

Tree pruning is performed in order to remove anomalies in the training data due to noise or outliers. The pruned trees are smaller and less complex.

The tree pruning approaches are:

- Pre-pruning - the tree is pruned by halting its construction early;

- Post-pruning - this approach removes a sub-tree from a fully grown tree.

The cost complexity is measured by the following two parameters:

- number of leaves in the tree;

- error rate of the tree.

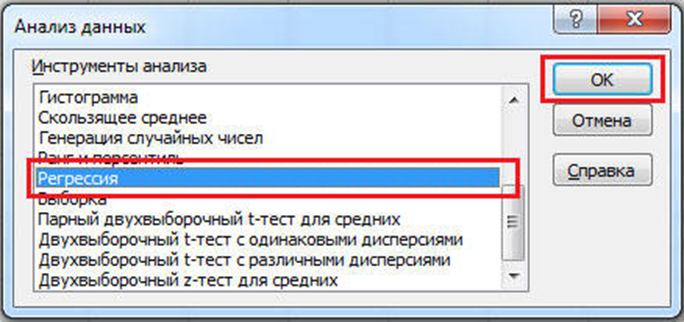

Analyzing data in Excel

Microsoft Excel is one of the most widely used software products. Its broad functionality finds application in almost any field.

Possessing the skills of working in this program, you can easily solve a very wide range of tasks. Microsoft Excel is often used for engineering or statistical analysis.

Excel provides a special add-in that makes such analysis considerably easier.

Let's consider how to enable data analysis in Excel, what it includes and how to use it.

Figure 6.3 - Data analysis in Excel

The first step is to install the add-in. The process is shown here for Microsoft Excel 2010. Click the "File" tab and click "Options", then select the "Add-Ins" section. Next, choose "Excel Add-ins" in the "Manage" box and click the "Go" button. In the window of available add-ins, select the "Analysis ToolPak" item and confirm the selection by clicking "OK".

If you also plan to use the analysis functions from Visual Basic, it is desirable to install "Analysis ToolPak - VBA" as well.

The installed package includes several tools that you can use depending on the tasks that you are facing. Let's consider the basic tools of the analysis:

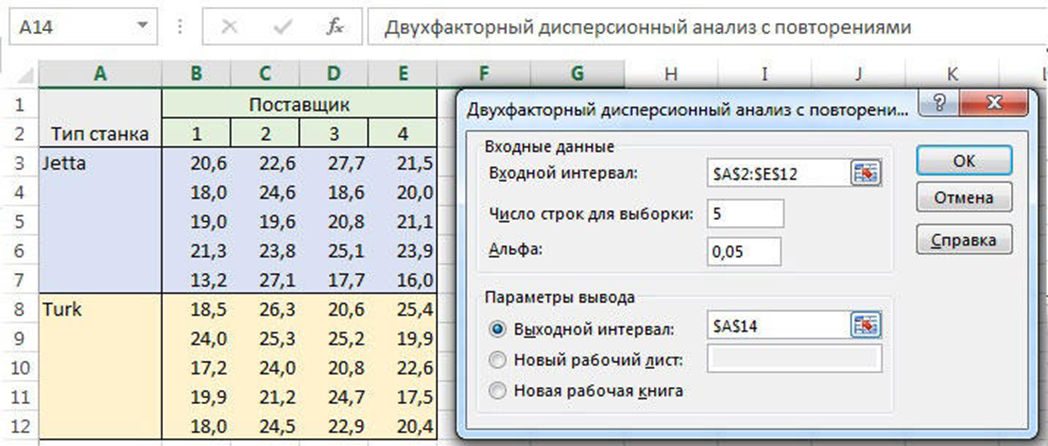

Dispersion analysis (analysis of variance, ANOVA) is a method of mathematical statistics aimed at finding dependencies in experimental data by examining the differences in mean values.

Dispersion analysis studies the effect of one or more independent variables, called factors, on the dependent variable.

Figure 6.4 - Dispersion analysis
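The calculation behind single-factor dispersion analysis can be sketched in plain Python: the F statistic compares the variation between group means with the variation inside the groups. The three groups of measurements are invented, and the sketch stops at the F value without computing a significance level.

```python
def one_way_anova_f(groups):
    """Return the F statistic for a one-factor analysis of variance."""
    k = len(groups)                          # number of groups (factor levels)
    n = sum(len(g) for g in groups)          # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within

# Hypothetical measurements of the dependent variable at three factor levels.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value = one_way_anova_f(groups)
print(f_value)
```

A large F value indicates that the group means differ by more than would be expected from the scatter inside the groups.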

Correlation analysis. Allows you to determine whether the values of one data group are related to the values of another group.

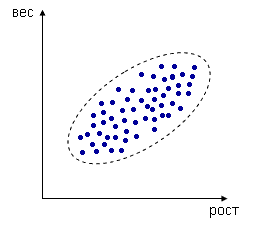

For example, if we measure the height and weight of a group of people, each measurement is represented by a point in two-dimensional space.

Figure 6.5 - Correlation analysis

In spite of the fact that the values are random, a certain dependence is generally observed - the values correlate.

In this case the correlation is positive: as one parameter increases, the other also increases.

If the correlation coefficient is negative, this means that there is an opposite relationship: the higher the value of one variable, the lower the value of the other.
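The correlation coefficient itself can be computed with a few lines of Python. The sketch below implements the Pearson coefficient; the height and weight values are invented for illustration.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical measurements: height in cm and weight in kg.
heights = [150, 160, 170, 180, 190]
weights = [50, 58, 66, 80, 90]

r = pearson(heights, weights)
print(round(r, 3))  # close to +1: strong positive correlation
```

The coefficient always lies between -1 and +1; values near zero mean that no linear relationship is visible in the data.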

Theory of algorithms

An algorithm is a system of rules that describes the sequence of actions that must be performed to solve a problem.

The term "algorithm" derives from the name of the 9th-century mathematician and astronomer al-Khwarizmi, a native of Khwarazm (in present-day Uzbekistan), who formulated the rules of the four arithmetic operations on multi-digit decimal numbers.

Presentation of algorithms

Verbal form - a description of the sequence of actions in words.

Graphic form - a representation of the algorithm as a diagram of geometric shapes.

Pseudo-code - a description of the algorithm in a conventional algorithmic language that includes elements of a programming language and natural-language phrases.

Programming-language form - the text of a program written in a programming language.

Consider an example.

Find the greater of two numbers X and Y.

Consider the algorithm written in verbal form.

We introduce the variable Max to store the maximum.

1. Enter the values of the numbers X and Y;

2. Compare X and Y;

3. If X is less than Y, write the value of Y to the variable Max;

4. Otherwise (X is greater than or equal to Y), write the value of X to the variable Max.

As seen from this example, a verbal description has the following disadvantages:

- the description of the algorithm is not strictly formalized;

- the description is verbose;

- some instructions can be interpreted ambiguously.

The most popular solution to these problems is a graphical presentation of the algorithm in the form of a flowchart (block diagram).
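For comparison, the same algorithm for finding the greater of X and Y can be written in a programming language, the last of the forms of presentation listed earlier. Python is used here as an example; the function name `maximum` is chosen for this sketch.

```python
def maximum(x, y):
    """Return the greater of two numbers."""
    if x < y:
        max_value = y
    else:
        # This branch also covers the case x == y.
        max_value = x
    return max_value

print(maximum(3, 5))
print(maximum(4, 4))
```

Unlike the verbal description, the program text leaves no room for interpretation: every possible pair of inputs follows exactly one branch.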

Internet technologies

Domain Name System

For Internet users, IP addressing is not very convenient, since the user must remember a lot of numbers. Therefore, a domain name system (DNS) was developed.

The domain name system is a distributed database that maps the domain names of computers connected to the Internet to their IP addresses.

There are two types of top-level domains.

1. Top-level domains of general purpose.

com - Commercial organizations.

edu - Educational institutions.

gov - Government institutions.

For example, www.yahoo.com

2. Top-level domains of countries.

These include the domain names us, uk, kz, ru, etc.

For example, www.kstu.kz

Each resource on the Internet has a Uniform Resource Locator (URL).

Most URLs have the following form:

protocol://host.domain/directory/file.name

where protocol is the application-level protocol of the TCP/IP stack used to get the resource (for example, HTTP, FTP, telnet, etc.).

host.domain is the domain name of the host computer on which the resource is located.

directory is the directory name.

file.name is the name of the resource file.

For example, http://stars.ru/fact/k2.html
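The parts of a URL can be extracted programmatically. The sketch below uses `urlsplit` from Python's standard `urllib.parse` module; the helper name `describe_url` and the keys of the returned dictionary are chosen for this example.

```python
import posixpath
from urllib.parse import urlsplit

def describe_url(url):
    """Split a URL into the parts named in the general form of a URL."""
    parts = urlsplit(url)
    directory, file_name = posixpath.split(parts.path)
    return {
        "protocol": parts.scheme,
        "host": parts.hostname,
        # The top-level domain is the last label of the host name.
        "top_level_domain": parts.hostname.rsplit(".", 1)[-1],
        "directory": directory,
        "file": file_name,
    }

info = describe_url("http://stars.ru/fact/k2.html")
for name, value in info.items():
    print(name, "=", value)
```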

Mobile Internet

Development of the 3G (third generation) standard began in the 1990s. This mobile communication technology allows you to use the Internet anywhere there is cellular coverage.

The 3G standard provides multimedia services, high-speed communication and high-quality sound.

3G allows you to organize video telephony, watch movies and TV programs on your mobile phone, etc.

Fourth-generation (4G) networks began to be introduced in 2010.

4G technologies allow data transfer at speeds exceeding 100 Mbit/s for mobile subscribers and 1 Gbit/s for fixed subscribers.
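To get a feel for these rates, the following sketch estimates the ideal transfer time of a file. It assumes decimal units (1 MB = 8 Mbit) and ignores protocol overhead and signal conditions, so real times will be longer; the 700 MB file size is an arbitrary example.

```python
def transfer_time_seconds(size_megabytes, rate_mbit_per_s):
    """Ideal time to transfer a file: size in bits divided by the bit rate."""
    size_megabits = size_megabytes * 8   # 1 byte = 8 bits
    return size_megabits / rate_mbit_per_s

# A hypothetical 700 MB video file.
print(transfer_time_seconds(700, 100))    # mobile subscriber, 100 Mbit/s
print(transfer_time_seconds(700, 1000))   # fixed subscriber, 1 Gbit/s
```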

Multimedia technologies

Cloud technologies

Cloud technologies are a convenient environment for storing and processing information. They combine hardware, software, communication channels and technical support for users.

Work in the "clouds" is aimed at reducing costs and improving the work efficiency of users.

The basic concept of "cloud" technologies is that information is stored and processed by means of a web server, and the result of these calculations is provided to the user through a web browser.

Cloud computing is a technology of distributed data processing in which computer resources are provided to the user as an Internet service.

A distinctive feature of cloud technologies is that they are not tied to a geographical location.

The client can work with cloud services from anywhere in the world and from any device that has Internet access.

Mobile technologies

Mobile technologies are spreading at an incredibly fast pace, and to keep up with them you need to follow new developments and learn them in time. Just a few years ago the landline telephone seemed sufficient, and today we can no longer imagine life without a mobile phone. Today, mobile information technologies include:

• GPS - satellite navigation system.

• GSM and UMTS - communication standards.

• WAP - a protocol by which you can access the Internet from your mobile phone.

• GPRS and EDGE - data transmission technologies.

• Wi-Fi - wireless local area network technology.

• 4G - a new generation of communication.

GPS (Global Positioning System) is a satellite navigation system consisting of 24 satellites operating in a single network, located in 6 orbital planes about 20,200 km above the Earth's surface.

GPS receivers do not transmit anything, but only receive data from satellites and determine their location by solving a mathematical problem.

To determine the coordinates of the point where you are, it is necessary that the GPS receiver sees at least four satellites. The more satellites the GPS receiver sees, the more accurately the coordinates are determined.

In just a few years, satellite navigation in mobile phones has become the norm. Users can now not only determine their coordinates but also voluntarily share their location. A GPS receiver is also very convenient for motorists.

GPS plays an important role in the work of rescue services. It significantly reduces the cost of search operations and the time needed for rescue operations. The GPS receivers used by these services cost about $3,000 and provide accuracy up to 1 m. There are even more expensive models that provide accuracy up to several centimeters.

GSM (Global System for Mobile Communications) is a global standard for digital mobile communications with time and frequency division of channels. It was developed under the auspices of the European Telecommunications Standards Institute (ETSI) in the late 1980s.

GSM belongs to the second generation of networks (2G).

WAP technology appeared on the mobile market in 1997. WAP made it possible to install programs on mobile phones directly from the Internet, without connecting the phone to a computer by cable.

Since that time, the "mobilization" of society has been under way: today every fifth user accesses the Internet exclusively from a mobile device.

1G (1970 - 1984)

All the first cellular communication systems were analog. As a consequence, they had poor protection against interference and high energy consumption, so 1G is no longer used.

In the networks of this generation, only voice calls were possible. There were no services such as SMS or Internet access, and there were no SIM cards.

2G (1980 - 1991)

The GSM standard belongs to the 2G generation - it allows you to use SIM cards, send messages and use the Internet.

Second-generation standards use digital signal transmission between the phone and the base station. Compared to analog systems (1G), they provide subscribers with a greater range of services and are much better protected against interference and eavesdropping.

3G (1990 - 2002)

The UMTS standard was developed and became the most widespread. Its advantages:

- high sound quality and a low level of background noise;

- increased system capacity (number of subscribers), 3-5 times greater than in GSM;

- full protection against unauthorized connection;

- reduced impact on the human body, which means the phone can be carried in a pocket or put under the pillow at night.

Third-generation communication standards provide fundamentally new services based on high-speed data transmission:

- video telephony - lets you see the other party during a conversation;

- mobile television - lets you watch TV programs on the screen of a mobile phone;

- video surveillance of remote sites from the display of a cell phone;

- high-speed wireless access to the Internet.

4G (2000 - 2010)

The main difference of the fourth generation networks from the third one is that the 4G technology is completely based on packet data protocols.

The fourth generation of mobile communication is characterized by a high data transfer rate (up to 100 Mbit/s) and enhanced voice quality.

4G networks carry all traffic, including voice, as packet data. Traditional circuit-switched calls are not possible over 4G; calls are made over IP, either through the operator's Voice over LTE (VoLTE) service or through Internet services such as Skype, Mail.ru Agent, WhatsApp, etc.

5G (2014 - 2017)

As of the end of 2014, technologies for fifth-generation (5G) networks were still under development. Huawei was one of the main developers.

The main task for networks of the fifth generation will be to expand the spectrum of frequencies used and increase the capacity of networks. It is expected that the new technology will solve the problem, which all operators in the world are working on - to improve the efficiency of the network infrastructure.

Gyroscope sensor

In principle, the gyroscope in a mobile phone does the same job as a classic rotary gyroscope, which is a rapidly spinning disk mounted in movable frames. Even if the position of the frames in space changes, the axis of rotation of the disk does not change. Because the disk spins constantly, driven for example by an electric motor, it is possible to determine continuously the position of the object in space, its tilt or roll.

Gyroscopes built into mobile phones make games more engaging. With this sensor a game can be controlled not only by the orientation of the device but also by the speed of its rotation, which provides more realistic control.
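How an application might turn rotation speed into orientation can be sketched as follows. The sketch assumes the sensor delivers angular velocity samples in degrees per second at a fixed time step; the sample values are invented, and real applications also have to correct for sensor drift.

```python
def integrate_rotation(angular_rates_dps, dt_seconds, start_angle=0.0):
    """Accumulate angular velocity samples (deg/s) into a rotation angle (deg)."""
    angle = start_angle
    angles = []
    for rate in angular_rates_dps:
        angle += rate * dt_seconds   # angle change during one time step
        angles.append(angle)
    return angles

# Hypothetical readings: the phone turns at 10 deg/s, sampled every 0.5 s.
print(integrate_rotation([10, 10, 10, 10], 0.5))
```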

Mobile operating systems

Modern life is inconceivable without mobile devices. Their quality and usability depend on their hardware characteristics and operating system.

The most common operating systems for mobile devices:

- Android.

- iPhone OS.

- Windows Mobile.

Android is a mobile operating system based on the Linux operating system.

Advantages:

• open source;

• high speed;

• convenient interaction with Google services;

• multitasking.

Disadvantages:

• many current versions;

• new versions require further refinement.

iPhone OS is a mobile operating system from Apple.

Advantages:

• ease of use;

• quality support service;

• regular updates, eliminating many problems in the work.

Disadvantages:

• lack of multitasking;

• no built-in document editor.

Windows Mobile is a multi-tasking and multi-platform OS.

Advantages:

• similarity with the desktop version;

• convenient synchronization;

• office programs are included.

Disadvantages:

• high requirements for equipment;

• the presence of a large number of viruses;

• instability in work.

Information Security

Information security is a set of tools, strategies, and principles that can be used to protect the cyber environment, organizations, and users from attackers.

Anti-hacking programs and backup systems are used to protect the information environment and resources of organizations from intruders.

In modern conditions, the focus of cybersecurity is shifting from protecting information on a separate object to creating a cybersecurity system as a part of information and national security.

Basic security tasks

Confidentiality - protection of important information from unauthorized access;

Availability - a guarantee that authorized users will have access to information;

Integrity - protection against failures and viruses that lead to the loss or destruction of information.

Availability of information

All measures to ensure information security are useless if they make access difficult for legitimate users.

Here, reliable authentication and properly implemented separation of user rights come to the fore.
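Authentication and separation of rights can be sketched with Python's standard library. The sketch stores a salted PBKDF2 hash instead of the password itself and checks actions against a role table; the iteration count, role names and permissions are assumptions made for illustration, not a recommended configuration.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor for this sketch

def hash_password(password, salt=None):
    """Return (salt, digest); a fresh random salt is generated if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password, salt, expected_digest):
    """Authentication: recompute the hash and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)

# Separation of rights: each role is allowed only certain actions (hypothetical).
ROLE_PERMISSIONS = {"accountant": {"read", "write"}, "auditor": {"read"}}

def is_allowed(role, action):
    return action in ROLE_PERMISSIONS.get(role, set())

salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))
print(verify_password("wrong guess", salt, stored))
print(is_allowed("auditor", "write"))
```

A legitimate user who presents the right password gets access quickly, while the rights table limits what each authenticated user may do.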

Ensuring data integrity

Today, all commercial information, accounting data, financial statements, plans, etc., are stored in the local computer network.

In such circumstances, information security is provided by a system of measures designed to reliably protect servers and workstations from failures and breakdowns that lead to the destruction or partial loss of information.
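One common way to detect that stored data has been damaged or altered is to keep a checksum alongside it. The sketch below uses SHA-256 from Python's standard `hashlib` module; the document contents are invented. A checksum only detects the change, so restoring the data still requires a backup copy.

```python
import hashlib

def checksum(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()

def is_intact(data: bytes, expected_checksum: str) -> bool:
    """Integrity check: the data is intact if its checksum has not changed."""
    return checksum(data) == expected_checksum

# Hypothetical accounting record and its checksum saved at write time.
original = b"Balance sheet 2017: assets 1000, liabilities 400"
stored_checksum = checksum(original)

damaged = b"Balance sheet 2017: assets 9000, liabilities 400"
print(is_intact(original, stored_checksum))
print(is_intact(damaged, stored_checksum))
```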

Cybersecurity of Kazakhstan

The Ministry of Defence and Aerospace Industry has been established in Kazakhstan, and all information security functions have been transferred to it.

The corresponding decree was signed by the President of the Republic of Kazakhstan on October 6, 2016.

On January 31, 2017, President Nursultan Nazarbayev, in his traditional address to the people of Kazakhstan, instructed the government and the National Security Committee to take measures to create the "Cybershield of Kazakhstan" system.

Computer viruses

Computer viruses are specially written programs that can "multiply", covertly inject copies of themselves into files and boot sectors of disks, and perform other undesirable actions.

After infecting a computer, a virus can activate and begin performing harmful actions that destroy programs and data.

Activation of the virus can be associated with various events:

- the arrival of a certain date;

- the launch of a program;

- the opening of a document.

The first known viruses, Virus 1, 2, 3 and Elk Cloner, appeared in 1981. In the winter of 1984, the first anti-virus utilities appeared.

The first virus epidemics occurred in 1986, when the Brain virus "infected" floppy disks for the first personal computers. On Friday, May 13, 1988, the Jerusalem virus appeared, which destroyed programs when they were launched.

In 2003 and 2004, unprecedented epidemics were caused by MsBlast (2003; according to Microsoft, more than 16 million systems), Sasser (2004; estimated damage 500 million dollars) and Mydoom (2004; estimated damage 4 billion dollars).

Client-server technology

A server is a computer that provides its resources for shared use.

A client is a computer that uses the resources of a server.

The client sends a request to the server and displays the response it receives. The server receives requests from clients, executes them and sends back a response with the results.
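
The request-response exchange can be sketched with Python's standard `socket` module. This is only an illustration under simple assumptions: both sides run on the same machine, the server handles a single client, and the "query" it executes is just converting the text to upper case. The function names are hypothetical.

```python
import socket
import threading


def serve_once(server_sock: socket.socket) -> None:
    """Server side: accept one client, execute its request, send the result."""
    conn, _addr = server_sock.accept()
    with conn:
        request = conn.recv(1024).decode("utf-8")
        response = request.upper()  # the "query" here is just upper-casing text
        conn.sendall(response.encode("utf-8"))


def ask_server(host: str, port: int, request: str) -> str:
    """Client side: send a request and return the server's response."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall(request.encode("utf-8"))
        return sock.recv(1024).decode("utf-8")


def demo() -> str:
    """Run the server in a background thread and query it once."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server_sock.listen(1)
        port = server_sock.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server_sock,))
        worker.start()
        reply = ask_server("127.0.0.1", port, "hello, server")
        worker.join()
        return reply


if __name__ == "__main__":
    print(demo())  # prints: HELLO, SERVER
```

In a real network the server would run on a separate computer and serve many clients in a loop; the division of roles stays the same.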

Network Cables

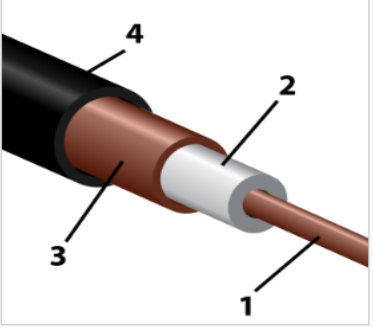

Coaxial cable. This is one of the first conductors used to create networks (fig. 12.1).

The maximum data transfer rate is 10 Mbit/s.

The cable is strongly susceptible to electromagnetic interference.

Coaxial cable structure:

1 - inner conductor;

2 - insulation;

3 - shield (aluminum braid);

4 - outer jacket.

Figure 12.1 – Coaxial cable

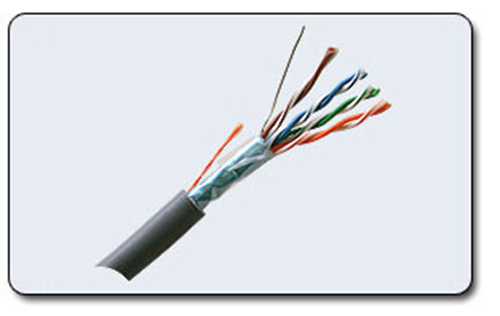

Twisted Pair. Currently, this is the most common network cable (fig. 12.2).

There are several categories of cable, numbered from 1 to 8, which determine the effective transmitted frequency range. The higher the category, the higher the data transfer rate.

Figure 12.2 – Twisted pair

Category 5 cable is typically used when building local networks. It supports a data rate of up to 100 Mbit/s when using 2 pairs and up to 1 Gbit/s when using 4 pairs.
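
To get a feel for what these rates mean in practice, the short calculation below estimates how long a file takes to transfer. It is a rough sketch: it assumes the link runs at its full nominal rate and ignores protocol overhead, and the 700 MB file size is an arbitrary example.

```python
def transfer_time_seconds(size_megabytes: float, rate_mbit_per_s: float) -> float:
    """Ideal transfer time: 1 byte = 8 bits, no protocol overhead."""
    size_megabits = size_megabytes * 8
    return size_megabits / rate_mbit_per_s


file_size_mb = 700  # hypothetical file size in megabytes

print(transfer_time_seconds(file_size_mb, 100))   # 2 pairs, 100 Mbit/s -> 56.0 seconds
print(transfer_time_seconds(file_size_mb, 1000))  # 4 pairs, 1 Gbit/s   -> 5.6 seconds
```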

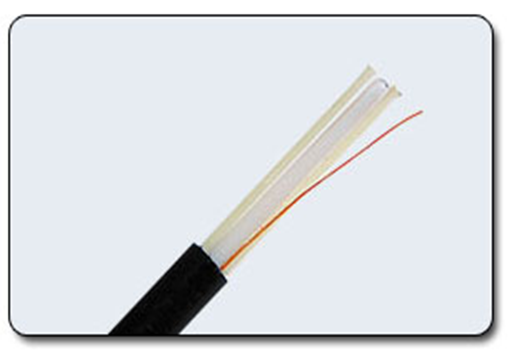

Fiber optic cable. The cable contains optical fibers protected by plastic insulation (fig. 12.3). The data transfer rate is from 1 to 10 Gbit/s. The cable is not subject to electromagnetic interference.

Figure 12.3 – Fiber optic cable

A network card is a peripheral device that allows a computer to communicate with other computers on the network.

By design, network cards are divided into:

• Cards built into the motherboard;

• Internal boards inserted into the slots of the motherboard;

• External boards that connect via USB interface.

The network card contains a microprocessor that encodes and decodes network packets. Each card has its own MAC address.

A MAC (Media Access Control) address is a unique identifier assigned to network equipment.

The MAC address makes it possible to identify each computer on the network that has a network card installed.
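
A MAC address is 6 bytes long and is usually written as six two-digit hexadecimal numbers separated by colons or hyphens; the first three bytes identify the manufacturer. The sketch below parses and normalizes such an address. The helper names and the sample address are hypothetical.

```python
def parse_mac(text: str) -> bytes:
    """Convert 'AA:BB:CC:DD:EE:FF' or 'AA-BB-CC-DD-EE-FF' into 6 raw bytes."""
    parts = text.replace("-", ":").split(":")
    if len(parts) != 6 or any(len(part) != 2 for part in parts):
        raise ValueError(f"not a MAC address: {text!r}")
    raw = bytes.fromhex("".join(parts))  # raises ValueError on non-hex digits
    if len(raw) != 6:
        raise ValueError(f"not a MAC address: {text!r}")
    return raw


def format_mac(raw: bytes) -> str:
    """Write the 6 bytes in the usual lower-case colon-separated form."""
    return ":".join(f"{byte:02x}" for byte in raw)


def vendor_prefix(raw: bytes) -> str:
    """The first three bytes identify the equipment manufacturer."""
    return format_mac(raw[:3])


mac = parse_mac("00-1A-2B-3C-4D-5E")  # made-up example address
print(format_mac(mac))     # 00:1a:2b:3c:4d:5e
print(vendor_prefix(mac))  # 00:1a:2b
```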

Hubs have several ports to which computers are connected. Hubs can be active or passive. Active hubs amplify and restore signals. Passive hubs do not require a power connection.

A switch is an intelligent device with a processor and buffer memory. The switch analyzes MAC addresses to determine where a packet of information comes from and where it is being sent, and connects only those computers. This greatly increases network performance.
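
The way a switch uses MAC addresses can be sketched as a small simulation. This is a simplified model, not the firmware of any real device: the class name, the port numbering and the short sample addresses are assumptions. The switch remembers on which port each sender was seen; a frame for a known address goes out on one port only, and a frame for an unknown address is sent to all other ports.

```python
class LearningSwitch:
    """Simplified model of a switch with a MAC address table."""

    def __init__(self, port_count: int) -> None:
        self.port_count = port_count
        self.mac_table: dict[str, int] = {}  # MAC address -> port number

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        """Process one frame and return the ports it is sent out on."""
        # Learn (or refresh) where the sender is connected.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: send to every port except the incoming one.
            return [port for port in range(self.port_count) if port != in_port]
        if out_port == in_port:
            # Destination is on the same port as the sender: nothing to forward.
            return []
        return [out_port]


switch = LearningSwitch(port_count=4)
print(switch.receive(0, "aa:aa", "bb:bb"))  # [1, 2, 3]  destination not yet known
print(switch.receive(1, "bb:bb", "aa:aa"))  # [0]        aa:aa was learned on port 0
print(switch.receive(0, "aa:aa", "bb:bb"))  # [1]        bb:bb was learned on port 1
```

After the first exchange, traffic between the two computers no longer reaches the other ports, which is why a switch loads the network less than a hub.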

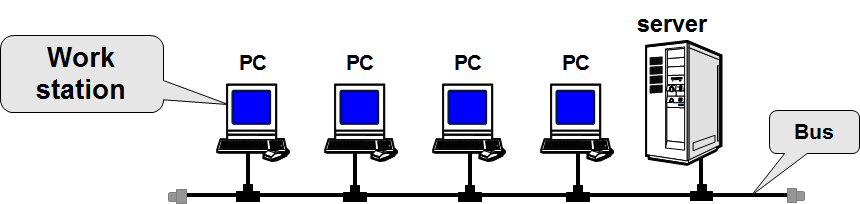

Bus network

Figure 12.4 – Bus network

Pluses:

• simplicity, low cable consumption;

• workstations are easy to connect;

• if a PC fails, the network keeps operating.

Disadvantages:

• if the bus breaks, the whole network stops working;

• there is one communication channel, so stations transmit in turn;

• collisions are possible when stations transmit simultaneously.

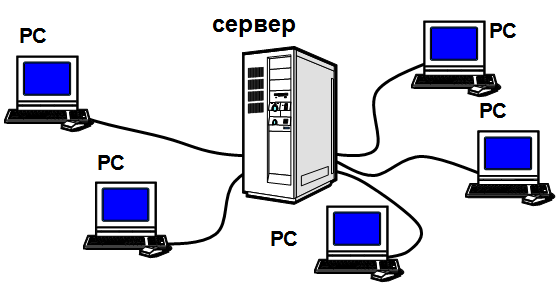

Star network

Figure 12.5 – Star network

Pluses:

• a single management center;

• a break in one cable does not affect the operation of the rest of the network.

Disadvantages:

• high cable consumption;

• the number of clients is limited (up to 16).

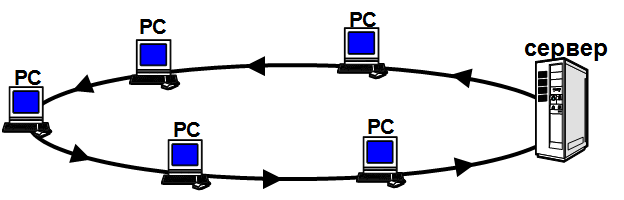

Ring network

Figure 12.6 – Ring network

Pluses:

• high level of security;

• each computer acts as a repeater.

Disadvantages:

• If any computer fails, the network does not work;

• It is difficult to connect a new PC.

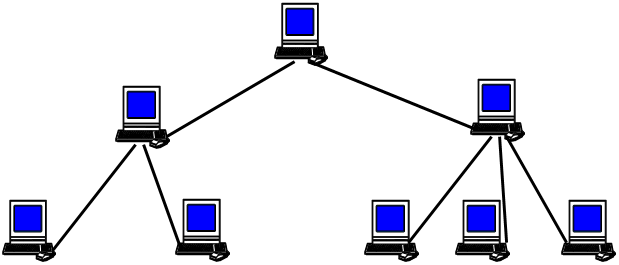

Tree network

Figure 12.7 – Tree network

In such a network, there is only one path between any two nodes.

The tree topology is flexible enough to cover several floors of a building with a single network.
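
The "only one path" property can be checked on a small model. In the sketch below the network is a Python dictionary that lists the neighbors of each node; the node names (a root switch, two floor switches and three PCs) are invented for the example. A connected network with N nodes is a tree exactly when it has N - 1 links.

```python
from collections import deque

# Hypothetical two-floor network: each node maps to its direct neighbors.
TREE = {
    "root-switch": ["floor1-switch", "floor2-switch"],
    "floor1-switch": ["root-switch", "pc1", "pc2"],
    "floor2-switch": ["root-switch", "pc3"],
    "pc1": ["floor1-switch"],
    "pc2": ["floor1-switch"],
    "pc3": ["floor2-switch"],
}


def find_path(graph: dict, start: str, goal: str):
    """Breadth-first search; returns a list of nodes or None if unreachable."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


def is_tree(graph: dict) -> bool:
    """A graph is a tree if it is connected and has (nodes - 1) links."""
    link_count = sum(len(neighbors) for neighbors in graph.values()) // 2
    first = next(iter(graph))
    connected = all(find_path(graph, first, node) is not None for node in graph)
    return connected and link_count == len(graph) - 1


print(is_tree(TREE))  # True
print(find_path(TREE, "pc1", "pc3"))
# ['pc1', 'floor1-switch', 'root-switch', 'floor2-switch', 'pc3']
```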

Network Connections

A router is a network device that allows you to transfer packets from one network to another.

When transmitting information, the router reads the destination address specified in each data packet and uses the routing table to determine the path along which the data should be sent. If the routing table contains no route for the address, the packet is discarded.
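
A routing table lookup can be sketched with Python's standard `ipaddress` module. The table contents and interface names below are made up. The rule of preferring the most specific matching network (the longest prefix) is standard router behavior but is an addition to what the text above states.

```python
import ipaddress
from typing import Optional

# Hypothetical routing table: destination network -> outgoing interface.
ROUTING_TABLE = [
    (ipaddress.ip_network("192.168.1.0/24"), "eth0"),
    (ipaddress.ip_network("192.168.0.0/16"), "eth1"),
    (ipaddress.ip_network("10.0.0.0/8"), "eth2"),
]


def route(destination: str) -> Optional[str]:
    """Return the interface for the destination, or None if the packet is discarded."""
    address = ipaddress.ip_address(destination)
    matches = [(net, iface) for net, iface in ROUTING_TABLE if address in net]
    if not matches:
        return None  # no route in the table: the packet is discarded
    # Prefer the most specific network (the longest prefix).
    _best_net, best_iface = max(matches, key=lambda match: match[0].prefixlen)
    return best_iface


print(route("192.168.1.7"))  # eth0
print(route("192.168.5.1"))  # eth1
print(route("8.8.8.8"))      # None
```

Home routers usually avoid the last case by having a default route that sends everything else to the Internet provider.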

In the residential sector, routers are usually used to connect the home network of computers to the communication channel of the Internet provider.

A modern router has a number of additional functions:

• Wi-Fi wireless access point;

• Firewall to protect the network from external attacks;

• Web interface for easy device configuration.

A bridge is a network device designed to combine segments of a computer network with different topologies into a single network.

However, unlike a router, a network bridge does not have a routing table and offers few configuration options.

A gateway is a network device designed to connect two networks that use different protocols.

One of the most common ways to use the gateway is to provide access from the local network to the Internet.

Wi-Fi technology

Wi-Fi is one of the modern forms of wireless communication. The technology was created in 1991.

The maximum signal transmission distance in such a network is 100 meters, but in open terrain it can be greater (up to 300-400 m).

Typically, a Wi-Fi network scheme contains an access point and several clients.

An access point is a base station designed to provide wireless access to a network.

All Wi-Fi equipment can be divided into two large classes:

• Active Wi-Fi equipment (access points and Wi-Fi routers);

• Wi-Fi adapters (clients).

Advantages of Wi-Fi

• No need to lay the cable;

• Convenient for users with laptops;

• Allows network access to mobile devices;

• Radiation emitted by Wi-Fi devices during data transfer is 100 times lower than that of a cellular phone.

Disadvantages of Wi-Fi

• Low security of data exchange;

• Low noise immunity;

• Many other devices operate in the 2.4 GHz band, such as Bluetooth devices and microwave ovens, which degrades the quality of communication.

A laptop or desktop computer can have a built-in Wi-Fi adapter. An external Wi-Fi adapter connects via a USB port to desktop computers, laptops, tablets, etc. The device looks like a USB flash drive.

Smart technology

SMART technology is a guide to setting goals correctly; it can be used to organize a business and to improve one's personal life.

To apply it, it is necessary to analyze information, set clear deadlines for achieving the goal, mobilize resources and strictly follow the plan.

Each company must establish business goals in order to increase profits, develop and move forward.

Large companies have used this method for a long time, and it is the main secret of their success.

A good business cannot be built without the ability to set clear objectives, which means setting a goal and achieving it within a given time frame.

The success of large corporations suggests that SMART technology is also useful for improving the quality of life of ordinary people.

SMART is a well-known and effective technology for setting and formulating goals.

SMART stands for a "smart" goal. The acronym is formed from the first letters of the English words that describe what a real goal should be:

Specific;

Measurable;

Achievable;

Relevant;

Time-bound.

To set a SMART goal, you must first answer six questions:

Who – who is involved?

What – what do I want to achieve?

Where – where will it take place?

When – what is the time frame?

Which – what are the restrictions?

Why – why is this necessary?

Electronic business

Electronic business is a form of doing business in which a significant part of the activity is carried out using information technologies (local and global networks, specialized software, etc.).

Electronic business includes sales, marketing, financial analysis, payments, employee recruitment, user support, etc.

A business model is a visual diagram that organizes the components of a project into a single system aimed at generating income. Creating a business model is useful because it lets you quickly and clearly depict the idea of the project and the mechanisms that will be used to generate profit.

Consider the business model proposed by the theorist in the field of business process modeling A. Osterwalder. The business model consists of eight blocks:

• Value propositions - what are the main products or services that can be used on the site or through the site, and what value do they have for the user?

• Key activities - what actions need to be taken to create / maintain value for the user?

• Consumer segments - for which of all Internet users is your site intended? Who is in your target audience?

• Key resources - what resources are needed to maintain and develop the site?

• Customer relationships - how will feedback be provided to users?

• Sales channels - where and how will the user be able to find your site and purchase your product or service?

• Cost structure - what cash costs will appear in connection with the maintenance of the site?

• Revenue streams - which mechanisms will allow you to profit from the site?

An important part of e-business is e-commerce.

E-commerce is a modern way of doing business that addresses the needs of business organizations. It reduces costs and improves the quality of goods and services while increasing the speed of delivery. A business derives many benefits from it: non-cash payment, better marketing management of products and services, 24/7 service, an improved brand image, reduced paperwork, and better customer service.

All actors interacting on the market can be divided into 3 categories:

• Business - legal persons, companies, enterprises;

• Consumer (customer) - ordinary citizens, consumers of certain services;

• Government - state structures.

From these categories we get the letters included in the abbreviations:

B - business.

C - consumer.

G - government.

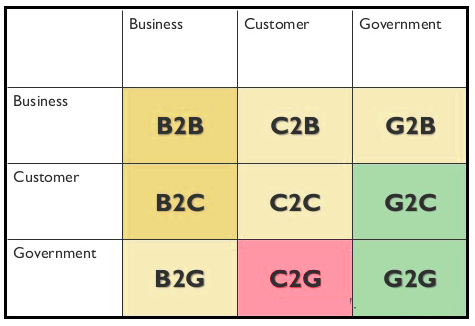

The business models in e-commerce (fig. 13.1) include Business to Business (B2B), Business to Consumer (B2C), Consumer to Consumer (C2C), Consumer to Business (C2B), Business to Government (B2G), Government to Business (G2B), and Government to Citizen (G2C).

Figure 13.1 – Business Models in E-Commerce

E-learning

E-learning is a system of learning with the help of information and electronic technologies.

The term "e-learning" first appeared in 1995. There is a definition that was given by UNESCO specialists: "e-Learning - learning through the Internet and multimedia".

E-learning is a technology for gaining knowledge through the use of IT.

E-learning includes:

- independent work with electronic materials using a personal computer, PDA, mobile phone, DVD player, TV and other devices;

- obtaining advice, tips and assessments from a geographically remote expert (teacher) through remote interaction;

- creation of a distributed community of users (social networks) engaged in shared virtual learning activities;

- timely, round-the-clock delivery of e-learning materials; standards and specifications for electronic learning materials and technologies, and distance learning tools;

- formation and improvement of the information culture of all heads of enterprises and divisions of the group, and their mastery of modern information technologies to improve the efficiency of their everyday activities;

- development and promotion of innovative educational technologies and their transfer to teachers;

- development of educational web resources;

- the opportunity to gain modern knowledge available anywhere in the world, at any time and in any place; access to higher education for persons with special needs;